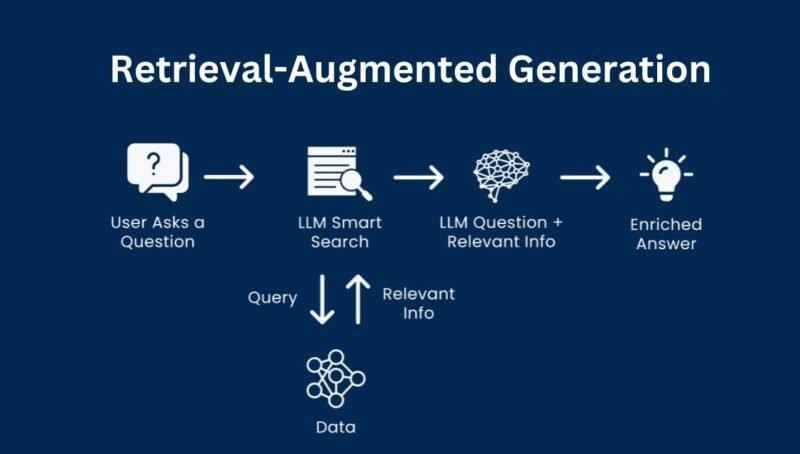

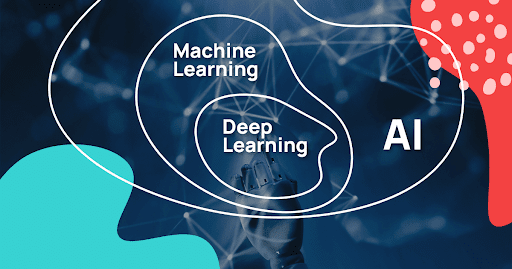

The field of Natural Language Processing (NLP) has grown rapidly in recent years, with Large Language Models (LLMs) at the forefront. One technique that has made LLMs more useful in practice is RAG, short for Retrieval-Augmented Generation. In this blog post, we’ll explore how RAG works, its components, its benefits, and its applications.

What is RAG?

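RAG is a technique that improves an LLM's answers by supplying it with relevant information retrieved from an external source at query time, instead of relying only on what the model learned during training. Its workflow is commonly described in three stages: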

- Retrieve: Relevant information is retrieved from a knowledge base, such as a document collection or database, based on the user's input.

- Augment: The retrieved information is added to the input prompt as context for the model.

- Generate: The LLM generates a response conditioned on this augmented prompt.
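Here is a minimal Python sketch of the three stages working together. It is a toy, not a production design, and it makes several assumptions. The knowledge base is an in-memory list of strings. Retrieval ranks passages by how many query words they share; real systems usually use embeddings or BM25 instead. `generate` is a hypothetical placeholder for an LLM call that simply returns the prompt it would send.

```python
import re

# Toy knowledge base (assumption): in practice this would be a document store or vector database.
KNOWLEDGE_BASE = [
    "RAG stands for Retrieval-Augmented Generation.",
    "The Eiffel Tower is located in Paris, France.",
    "Python was created by Guido van Rossum.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieve: rank documents by word overlap with the query and keep the top k."""
    query_tokens = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_tokens & tokenize(d)), reverse=True)
    return ranked[:k]


def augment(query: str, passages: list[str]) -> str:
    """Augment: place the retrieved passages into the prompt as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


def generate(prompt: str) -> str:
    """Generate: hypothetical stand-in for an LLM call; here it just returns the prompt."""
    return prompt


if __name__ == "__main__":
    question = "Where is the Eiffel Tower?"
    passages = retrieve(question, KNOWLEDGE_BASE)
    print(generate(augment(question, passages)))
```

In a real application, only `generate` would call a model. The retrieve and augment steps stay ordinary code that you can test and tune independently.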

Benefits of RAG

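- Improved accuracy: RAG grounds an LLM's responses in information retrieved from a knowledge base. This can reduce made-up answers (hallucinations) and lets the model use information newer than its training data.

- Increased efficiency: Because the knowledge lives in an external store, it can be updated by editing the knowledge base rather than by retraining or fine-tuning the model. Each query still pays for the retrieval step and a longer prompt, so the saving is in maintenance rather than in per-request compute.

- Enhanced context understanding: By supplying passages relevant to the input prompt, RAG gives the model context it would otherwise lack, leading to more informative and relevant responses.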

Applications of RAG

- Question Answering: RAG is particularly useful in question answering tasks, where it can retrieve relevant information and generate accurate answers.

- Text Summarization: RAG can be used to summarize long documents by retrieving key points and generating a concise summary.

- Chatbots: RAG can be integrated into chatbots to provide more informative and engaging responses to user queries.

How RAG Works

Retrieve

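- Knowledge Base: Documents are stored in a searchable form. They are typically split into smaller chunks and indexed, for example as vector embeddings or with a keyword index.

- Query: A search query is derived from the input prompt; often it is simply the prompt itself or its embedding.

- Retrieval: The knowledge base is searched, and the chunks most relevant to the query (usually the top k) are returned.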
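The retrieve stage can be sketched with a classic sparse method. Documents are split into fixed-size word chunks, each chunk is scored against the query with TF-IDF-weighted cosine similarity, and the top k chunks are returned. This method is an assumption chosen so the example needs only the Python standard library. Many RAG systems use dense embeddings and a vector index instead. The helper names `chunk` and `TfidfIndex` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 50) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class TfidfIndex:
    """Toy knowledge base of text chunks, searchable by TF-IDF cosine similarity."""

    def __init__(self, chunks: list[str]):
        self.chunks = chunks
        counts = [Counter(tokenize(c)) for c in chunks]
        n = len(chunks)
        # Document frequency: number of chunks that contain each term.
        df = Counter(term for c in counts for term in c)
        # Smoothed inverse document frequency, so no term gets weight zero.
        self.idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
        self.vectors = [self._weigh(c) for c in counts]

    def _weigh(self, counts: Counter) -> dict[str, float]:
        # Terms never seen in the index get weight 0 and cannot affect similarity.
        return {t: tf * self.idf.get(t, 0.0) for t, tf in counts.items()}

    @staticmethod
    def _cosine(a: dict[str, float], b: dict[str, float]) -> float:
        dot = sum(w * b.get(t, 0.0) for t, w in a.items())
        norm_a = math.sqrt(sum(w * w for w in a.values()))
        norm_b = math.sqrt(sum(w * w for w in b.values()))
        return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        """Return the k highest-scoring (score, chunk) pairs for the query."""
        q = self._weigh(Counter(tokenize(query)))
        scored = [(self._cosine(q, v), c) for v, c in zip(self.vectors, self.chunks)]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    docs = [
        "Paris is the capital of France. The Eiffel Tower stands in Paris.",
        "Tokyo is the capital of Japan.",
        "Photosynthesis converts light energy into chemical energy in plants.",
    ]
    chunks = [c for d in docs for c in chunk(d, size=50)]
    index = TfidfIndex(chunks)
    for score, text in index.search("What is the capital of Japan?", k=2):
        print(f"{score:.3f}  {text}")
```

The IDF weighting down-weights common words such as "the" and "of," so chunks that share rarer query terms like "japan" rank higher. The `size` and `k` parameters are the knobs you would tune in practice.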

Augment

- Contextualization: The retrieved chunks are inserted into the prompt alongside the user's question, usually with an instruction to answer using that context.

- Additional Information: Extra details, such as source names or the conversation history, may also be included to give the model more context.
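Here is a minimal sketch of the augment step. It assumes the retrieved chunks arrive as (source, text) pairs that are already sorted by relevance. Each passage is numbered so the model can cite it, and its source name is included as extra context. Because the model's context window is limited, passages stop being added once a rough character budget is reached. The prompt wording, the `max_chars` budget, the helper name `build_prompt`, and the sample passages are all hypothetical illustrations, not a standard template.

```python
def build_prompt(question: str, passages: list[tuple[str, str]], max_chars: int = 2000) -> str:
    """Combine the question with retrieved passages into one augmented prompt.

    `passages` is a list of (source, text) pairs, most relevant first.
    Passages are added in order until the next one would exceed `max_chars`.
    """
    context_lines: list[str] = []
    used = 0
    for i, (source, text) in enumerate(passages, start=1):
        entry = f"[{i}] ({source}) {text}"
        if used + len(entry) > max_chars:
            break  # stop before overflowing the context budget
        context_lines.append(entry)
        used += len(entry)
    context = "\n".join(context_lines) if context_lines else "(no relevant context found)"
    return (
        "You are a helpful assistant. Answer the question using only the numbered "
        "context passages, and cite them like [1]. If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Hypothetical retrieved passages, for illustration only.
    retrieved = [
        ("faq.md", "Refunds are available within 30 days of purchase."),
        ("policy.pdf", "Digital items are non-refundable once downloaded."),
    ]
    print(build_prompt("Can I get a refund?", retrieved))
```

Telling the model to say when the context is insufficient is one common way to discourage answers that go beyond the retrieved material. Numbered citations make it easier to check the answer against its sources.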

Generate

- Text Generation: The LLM receives the augmented prompt and generates text that responds to the user's input.

RAG Table

| Stage | Description | Benefits |
|---|---|---|
| Retrieve | Retrieves relevant information from a knowledge base | Improved accuracy, increased efficiency |
| Augment | Adds the retrieved information to the prompt as context | Enhanced context understanding, improved accuracy |
| Generate | Generates a response from the augmented prompt | Improved accuracy, more relevant responses |


Conclusion

RAG is a practical and widely used technique in NLP and LLM applications. By retrieving relevant information from knowledge bases and adding it to the prompt, RAG helps LLMs provide more accurate and informative responses. As the field of NLP continues to evolve, RAG is likely to play an increasingly important role in how language models are used.

Actionable Takeaways

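- Add RAG to your LLM application to ground its answers in your own data and keep its knowledge up to date.

- Store relevant documents in a searchable knowledge base, and add the retrieved passages to the model's prompt as context.

- Experiment with different RAG designs (for example, the retrieval method, chunk size, and number of retrieved passages) to find the best approach for your specific use case.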