OpenAI, a leader in artificial intelligence research, is reportedly facing new challenges in advancing its models. According to a recent report from The Information, OpenAI’s forthcoming model, codenamed Orion, might not represent as dramatic an improvement as past upgrades, such as the leap from GPT-3 to GPT-4. While Orion is said to outperform existing models in certain respects, insiders described a marked slowdown in the rate of improvement. In some areas, particularly coding, Orion may not significantly outperform previous models.

This slowdown has reportedly prompted OpenAI to devise new strategies for sustaining progress in its flagship models. The company has created a foundations team dedicated to exploring ways to improve AI models despite a diminishing supply of fresh training data. The strategies reportedly include training Orion on synthetic data generated by AI models and further optimizing models during the post-training phase.

The Challenge of Slowing AI Model Advancements

AI models like those developed by OpenAI rely on vast amounts of data to improve. Each new model typically brings enhanced performance across tasks, thanks to the availability of high-quality data and significant computational resources. However, as OpenAI’s models grow more advanced, the supply of new, high-quality training data is becoming scarce. This scarcity could explain why Orion’s performance gains appear less substantial than past upgrades.

A decline in data availability isn’t unique to OpenAI; it’s a broader industry issue. High-quality datasets are crucial for training powerful AI models. But with fewer new datasets available and existing ones already heavily used, companies are exploring creative ways to work around this limitation. OpenAI’s reported choice to train Orion on synthetic data is one such approach, using artificially generated data to fill the gaps left by dwindling real-world sources.

OpenAI’s Foundations Team and Synthetic Data

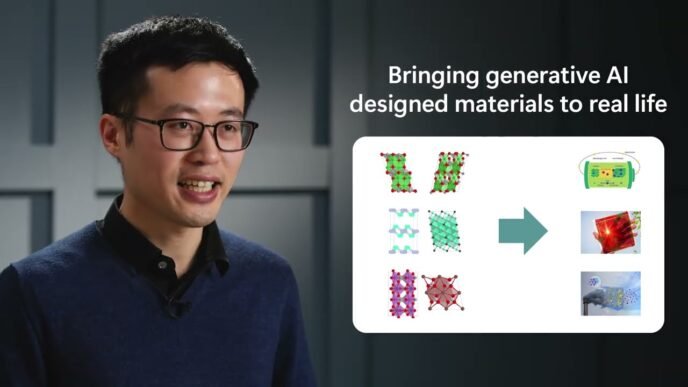

To address the data challenge, OpenAI has reportedly established a specialized foundations team. This team is tasked with researching and implementing novel techniques to advance the company’s models, even in a data-scarce environment. One strategy the team is exploring is synthetic data generation. Synthetic data is produced by AI models, often mimicking patterns observed in real-world data. This process allows the model to learn from artificially generated scenarios, expanding its knowledge base.

The use of synthetic data in AI training is still relatively new, but it holds promise. By supplementing real-world data with synthetic data, OpenAI aims to create more comprehensive models that generalize better across applications. Synthetic data generation, however, comes with its own challenges. The generated data must be carefully curated and filtered so that it preserves realistic, meaningful patterns and does not introduce biases or inaccuracies.
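As a rough illustration of the general idea, and not a description of how OpenAI actually trains Orion, the toy sketch below fits a simple statistical model to a handful of "real" measurements, samples synthetic values from it, and discards samples that stray too far from the real data before mixing the two sets. The Gaussian model, the function names, and the `max_z` threshold are all assumptions made for this example; production systems generate text with large models and use far more elaborate quality checks.

```python
import random
import statistics


def fit_gaussian(samples):
    """Estimate the mean and standard deviation of the real observations."""
    return statistics.mean(samples), statistics.stdev(samples)


def generate_synthetic(mean, stdev, n, rng):
    """Draw n synthetic values from the fitted distribution."""
    return [rng.gauss(mean, stdev) for _ in range(n)]


def filter_plausible(candidates, mean, stdev, max_z=2.0):
    """Keep candidates within max_z standard deviations of the real mean."""
    return [x for x in candidates if abs(x - mean) <= max_z * stdev]


def augment(real, n_synthetic, seed=0):
    """Return (real + filtered synthetic data, filtered synthetic data)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    mean, stdev = fit_gaussian(real)
    candidates = generate_synthetic(mean, stdev, n_synthetic, rng)
    synthetic = filter_plausible(candidates, mean, stdev)
    return real + synthetic, synthetic


# Hypothetical "real-world" measurements, invented for illustration only.
real_data = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0]
combined, synthetic = augment(real_data, n_synthetic=20)
print(len(real_data), len(synthetic), len(combined))
```

The filtering step is the part that matters most here: without some check against the real data, errors in the generator would flow straight into the training set.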

OpenAI is also reportedly working on improving models post-training. This involves optimizing a model’s capabilities after the initial training phase through techniques such as reinforcement learning and fine-tuning on specific tasks. Post-training optimization could help models like Orion overcome performance gaps in areas where real-world data alone may not be sufficient.

Implications for AI Development and the Future of Language Models

The slowdown in AI improvement raises important questions about the future trajectory of AI advancements. While AI has made great strides, this bottleneck highlights the limitations that could arise as models reach their maximum potential under existing methodologies. The success of OpenAI’s new strategies will be crucial not only for the company’s future but also for the broader AI community, as other organizations face similar data challenges.

AI research is moving towards more efficient ways to build models that perform well with limited data. Techniques such as transfer learning (leveraging pre-trained models for new tasks) and unsupervised learning (training models without labeled data) may become even more vital as data scarcity grows. OpenAI’s efforts with synthetic data and post-training improvements signal a shift towards data efficiency in AI development, where existing data resources are used more fully rather than relying on ever larger new datasets.

OpenAI’s Response and Future Prospects

OpenAI has not yet responded to requests for comment regarding Orion or the reported development slowdown. In a prior statement, OpenAI mentioned that it had no plans to release a model named Orion within the year. While this suggests that Orion’s official release may still be some time away, OpenAI’s current focus on foundational improvements could influence the development of Orion or future models. As OpenAI and its competitors adapt to these new challenges, we may see additional innovative techniques emerge, shaping the next generation of AI models.

Despite the potential slowdown, OpenAI’s efforts to overcome these limitations could yield new methods that drive the industry forward. AI development remains a highly competitive and evolving field, and each breakthrough helps bring new capabilities closer to practical applications.

Conclusion: The Path Forward for OpenAI and the AI Industry

OpenAI’s journey with Orion highlights a key challenge facing advanced AI development: sustaining model improvements with limited new data. The company’s efforts to mitigate this through synthetic data and post-training optimizations may define a new direction for the industry. As AI research shifts to make better use of existing resources, the methods developed could benefit not only language models but also applications in robotics, healthcare, and finance.

This ongoing evolution underscores the importance of data-efficient AI development. If OpenAI’s strategies with Orion prove effective, they could offer a blueprint for other AI developers facing similar constraints. As more information becomes available, the AI community will be keen to see whether OpenAI’s latest innovations enable continued progress in AI model performance.
