Neural networks have become the backbone of modern artificial intelligence, powering everything from natural language processing to image recognition. These networks, loosely modeled on the way our brains operate, are built by interconnecting perceptrons, which are simplified representations of biological neurons. Recently, at a leading machine learning conference in Vancouver, researchers unveiled a different approach: hardware-embedded neural networks, in which the network is programmed directly into chip hardware. This approach promises to significantly enhance performance while reducing energy consumption.

The Current State of Neural Networks

Traditional neural networks rely heavily on perceptrons, which, despite their power when scaled, come with significant energy costs. For instance, large models like GPT-4 and Stable Diffusion consume vast amounts of energy during training and operation. Microsoft’s recent deal to buy power from a restarted reactor at the Three Mile Island nuclear plant for its data centers underscores the growing energy demands of these technologies.

- Energy Statistics: One widely cited estimate puts the carbon emitted by training a single state-of-the-art neural network at roughly that of five cars over their lifetimes.
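To make the cost argument concrete, here is a minimal sketch of a single perceptron and of how the arithmetic grows with layer size. The function names, weights, and layer sizes are illustrative assumptions, not values from any particular model.

```python
from typing import Sequence


def perceptron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """Classic perceptron: weighted sum of the inputs followed by a step function."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def dense_layer_macs(n_inputs: int, n_units: int) -> int:
    """Multiply-accumulate operations needed to evaluate one fully connected layer once."""
    return n_inputs * n_units


# Illustrative values only (assumptions, not taken from the article).
fires = perceptron([1.0, 1.0], weights=[0.6, 0.6], bias=-1.0)       # 1.2 - 1.0 > 0
stays_off = perceptron([1.0, 0.0], weights=[0.6, 0.6], bias=-1.0)   # 0.6 - 1.0 <= 0
macs = dense_layer_macs(n_inputs=784, n_units=128)                  # one small layer
print(fires, stays_off, macs)
```

Every perceptron needs one multiplication per input, so even a small layer of 128 units fed by 784 inputs requires about 100,000 multiply-accumulate operations per evaluation. Large models repeat this across billions of weights, which is where the energy goes.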

Limitations of Software-Based Neural Networks

The inefficiencies inherent in software-based neural networks stem from the need to translate network designs into hardware instructions, a process that incurs both time delays and energy costs. This translation from high-level abstractions to hardware execution leads to significant performance bottlenecks, particularly in real-time applications.

- Latency Issues: Studies show that traditional neural networks can introduce latency of several milliseconds, which can be detrimental for applications requiring immediate responses, such as autonomous driving.
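A quick back-of-the-envelope calculation shows why a few milliseconds matter on the road. The speed and latency below are hypothetical figures chosen for illustration, not measurements from any study.

```python
def distance_during_latency(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance in metres a vehicle covers while waiting latency_ms for a result."""
    return speed_m_per_s * (latency_ms / 1000.0)


# Hypothetical figures: 30 m/s is roughly 108 km/h, and 5 ms stands in
# for "several milliseconds" of inference latency.
blind_distance = distance_during_latency(speed_m_per_s=30.0, latency_ms=5.0)
print(f"{blind_distance:.2f} m travelled before the network responds")
```

Under these assumptions the vehicle travels about 15 cm per inference before it can react, and that distance adds up when several networks run one after another.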

Advantages of Hardware-Embedded Neural Networks

Hardware-embedded neural networks present numerous advantages, particularly in speed and energy efficiency. By programming networks directly into chip hardware, researchers can achieve faster image recognition and reduce energy usage dramatically.

- Speed Improvements: A recent study found that hardware-embedded networks can process images up to 100 times faster than their software counterparts.

- Energy Efficiency: Data suggests that these networks could consume less than 1% of the energy required by traditional networks.

Technical Insights into Hardware-Embedded Neural Networks

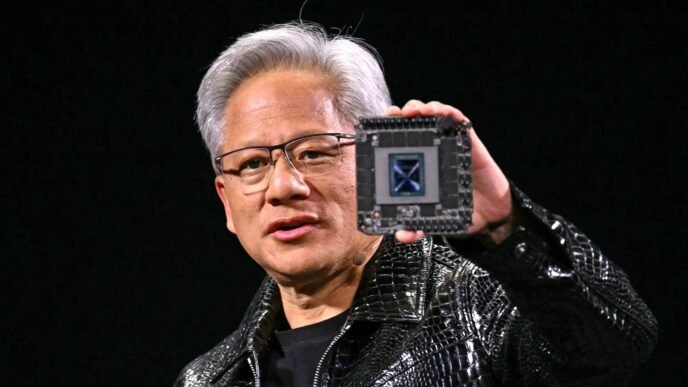

The technology behind hardware-embedded networks primarily involves logic gates, the fundamental building blocks of computer chips. Unlike traditional GPU-based systems, where the network runs as software that must pass through several layers before it reaches the hardware, these networks are expressed as patterns of logic gates that the chip evaluates directly.
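As a rough illustration of the idea, the sketch below simulates a tiny network whose nodes are two-input logic gates rather than perceptrons. It is not the researchers' design: the gate set, the wiring format, and the hand-picked majority-vote example are all assumptions made for this sketch, and a real system would learn or synthesize the gates and wiring.

```python
from typing import Callable, Dict, List, Tuple

Gate = Callable[[int, int], int]

# A small, hypothetical gate library operating on single bits (0 or 1).
GATES: Dict[str, Gate] = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "NAND": lambda a, b: 1 - (a & b),
}

# Each node is (gate name, index of first input, index of second input),
# where the indices refer to the outputs of the previous layer.
Layer = List[Tuple[str, int, int]]


def run_gate_network(layers: List[Layer], bits: List[int]) -> List[int]:
    """Evaluate the layers in order, feeding each layer's outputs to the next."""
    values = list(bits)
    for layer in layers:
        values = [GATES[name](values[i], values[j]) for name, i, j in layer]
    return values


# Hand-wired example: majority vote over three input bits.
majority: List[Layer] = [
    [("AND", 0, 1), ("AND", 0, 2), ("AND", 1, 2)],  # every pair of inputs
    [("OR", 0, 1), ("OR", 2, 2)],                    # OR(x, x) passes x through
    [("OR", 0, 1)],                                  # combine into one output bit
]

print(run_gate_network(majority, [1, 0, 1]))  # two of three inputs are set
print(run_gate_network(majority, [1, 0, 0]))  # only one input is set
```

No multiplications or floating-point values appear anywhere. Each node is a single bit-level operation, which is the kind of work a chip can carry out natively.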

- Types of Hardware:

  - FPGAs (Field-Programmable Gate Arrays): Reconfigurable chips that can be programmed to implement many different logic gate patterns.

  - ASICs (Application-Specific Integrated Circuits): Fixed-function chips optimized for a specific task, offering higher efficiency and, at volume, lower cost per chip.
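The sketch below gives one way to picture how a reconfigurable chip emulates different gates. It models a simplified two-input lookup table (LUT) whose behavior is set entirely by a 4-bit configuration value. The function name and bit layout are assumptions for illustration, and LUTs in real FPGAs usually take more inputs.

```python
from typing import Callable


def make_lut2(truth_table: int) -> Callable[[int, int], int]:
    """Return a 2-input gate configured by a 4-bit truth table.

    Bit k of truth_table is the output for inputs (a, b) with k = 2*a + b.
    """
    if not 0 <= truth_table <= 0b1111:
        raise ValueError("truth_table must fit in 4 bits")

    def gate(a: int, b: int) -> int:
        index = (a << 1) | b               # select one of the four table entries
        return (truth_table >> index) & 1  # read that entry's bit

    return gate


# The same "hardware" becomes a different gate when its configuration changes.
and_gate = make_lut2(0b1000)  # only entry 3, inputs (1, 1), is set
xor_gate = make_lut2(0b0110)  # entries 1 and 2, inputs (0, 1) and (1, 0), are set

print([and_gate(a, b) for a in (0, 1) for b in (0, 1)])
print([xor_gate(a, b) for a in (0, 1) for b in (0, 1)])
```

An ASIC, by contrast, would fix the equivalent of `truth_table` at manufacturing time, giving up this flexibility in exchange for efficiency.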

However, developing these networks comes with its own challenges, including design complexity and the need for significant initial investment. Scalability is also an open question, since a network embedded in hardware is harder to adapt to changing demands than one defined in software.

Future Directions and Implications

The potential impact of hardware-embedded neural networks spans several industries, including healthcare, automotive, and robotics. For instance, in healthcare, these networks could enable real-time diagnostics and personalized treatment plans, while in automotive technology, they could enhance the capabilities of self-driving systems.

- Emerging Technologies: Integrating hardware-embedded networks with quantum computing and other advanced technologies could extend these gains further, though such combinations remain speculative.

Predictions suggest that as hardware-embedded networks evolve, they will play a crucial role in the next wave of AI developments, potentially transforming consumer technology and our everyday devices.

By exploring the potential of hardware-embedded neural networks, researchers are paving the way for a more efficient and sustainable future for AI. The balance between innovation and energy sustainability is critical as we navigate this exciting frontier.
