Imagine teaching a computer to recognize your handwriting or to understand spoken language. Sounds complex, right? Thanks to Artificial Neural Networks (ANNs), these feats are not only possible but are becoming commonplace in our daily lives. In this blog, we’ll unravel the mysteries of ANNs, explaining what they are, how they work, their applications, future prospects, and how you can start learning about them.

What Are Artificial Neural Networks?

Inspired by the Human Brain

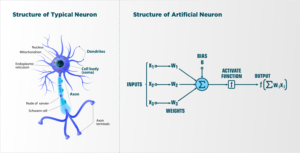

Artificial Neural Networks are computational models loosely inspired by the human brain’s structure and function. Just as our brains consist of interconnected neurons that transmit signals, ANNs are made up of layers of interconnected nodes or “neurons” that process data.

The Building Blocks

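• Neurons (Nodes): The fundamental units of the network. Each neuron receives inputs, computes a weighted sum of them plus a bias, and passes the result through an activation function to produce an output.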

• Layers: Neurons are organized into layers. Input layers receive data, hidden layers transform it, and output layers produce the final result.

• Weights and Biases: Each connection between neurons has a weight, and each neuron has a bias. Both are adjusted as the network learns; the bias shifts the input to the neuron’s activation function.
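To make these building blocks concrete, here is a minimal sketch of a single neuron and a layer in plain Python. It assumes a sigmoid activation, and the function names (`neuron`, `dense_layer`) and example numbers are made up for illustration; real frameworks compute whole layers at once with matrix operations.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One neuron: weighted sum of the inputs, plus a bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # pre-activation value
    return sigmoid(z)


def dense_layer(inputs: list[float], weight_rows: list[list[float]], biases: list[float]) -> list[float]:
    """A layer: several neurons that all read the same inputs, each with its own weights and bias."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Example values are made up for illustration.
print(neuron([0.5, -1.0, 2.0], [0.4, 0.3, -0.2], bias=0.1))  # sigmoid(-0.4), about 0.401
```

Stacking several `dense_layer` calls, each feeding its outputs into the next, gives the input → hidden → output structure described above.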

How Do Artificial Neural Networks Work?

The Learning Process

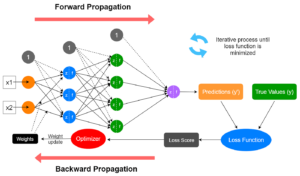

1. Data Input: Data is fed into the input layer.

2. Forward Propagation: Data moves forward through the network, layer by layer.

3. Activation Functions: Neurons apply activation functions to inputs to introduce non-linearity.

4. Output Generation: The network produces an output, such as a prediction or classification.

5. Error Calculation: The output is compared to the expected result, and a loss function measures how far off the prediction is.

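6. Backward Propagation: The error is propagated back through the network to compute how much each weight and bias contributed to it (the gradient of the loss). An optimizer such as gradient descent then uses these gradients to adjust the weights and biases.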

7. Iteration: Steps 1-6 are repeated, typically over many passes through the training data (called epochs), until the network’s performance is satisfactory.
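The seven steps above can be sketched as a tiny training loop. This is an illustrative assumption rather than how any particular framework works: it trains a single sigmoid neuron (effectively logistic regression) on the logical OR function with binary cross-entropy loss and plain gradient descent. With only one neuron, backpropagation collapses to a single gradient; deeper networks apply the chain rule layer by layer. The dataset, learning rate, and epoch count are arbitrary choices.

```python
import math
import random

EPS = 1e-12  # keeps log() away from log(0)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy labeled dataset (supervised learning): inputs and the expected OR output.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def predict(x, weights, bias):
    return sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)


def train(data, epochs=2000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(len(data[0][0]))]
    bias = 0.0
    for _ in range(epochs):                          # step 7: iterate
        total_loss = 0.0
        for x, y in data:                            # step 1: data input
            y_hat = predict(x, weights, bias)        # steps 2-4: forward pass, activation, output
            # Step 5: binary cross-entropy loss for this example.
            total_loss += -(y * math.log(y_hat + EPS) + (1 - y) * math.log(1 - y_hat + EPS))
            # Step 6: for sigmoid + binary cross-entropy, dLoss/dz simplifies to (y_hat - y);
            # gradient descent moves each parameter against its gradient.
            grad_z = y_hat - y
            weights = [wi - learning_rate * grad_z * xi for wi, xi in zip(weights, x)]
            bias -= learning_rate * grad_z
    return weights, bias, total_loss / len(data)     # average loss over the last epoch


weights, bias, loss = train(DATA)
for x, y in DATA:
    print(x, y, round(predict(x, weights, bias), 3))
```

In practice, libraries such as TensorFlow and PyTorch compute the gradients in step 6 automatically, so you rarely derive them by hand.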

Types of Activation Functions

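• Sigmoid Function: Squashes any input into the range (0, 1), which makes it useful for outputting probabilities, for example in binary classification.

• ReLU (Rectified Linear Unit): Outputs max(0, x). It is cheap to compute and a common default for hidden layers.

• Softmax Function: Converts a vector of raw scores into probabilities that sum to 1. It is used in the output layer for multi-class classification tasks.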
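As a rough sketch, the three activation functions can be written in a few lines of plain Python. Frameworks provide vectorized versions of these; the code below is only meant to show the math.

```python
import math


def sigmoid(z: float) -> float:
    """Map any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Pass positive values through unchanged; clamp negatives to 0."""
    return max(0.0, z)


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(0.0))               # 0.5
print(relu(-3.0), relu(2.5))      # 0.0 2.5
print(softmax([2.0, 1.0, 0.1]))   # roughly [0.659, 0.242, 0.099]
```

Note that softmax preserves the ordering of the scores: the largest score always gets the largest probability.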

Technical Aspects Simplified

Supervised vs. Unsupervised Learning

• Supervised Learning: The network learns from labeled data (input-output pairs).

• Unsupervised Learning: The network finds patterns in unlabeled data.

Overfitting and Underfitting

• Overfitting: The model learns the training data too well, including noise, reducing its ability to generalize.

• Underfitting: The model is too simple to capture underlying patterns.

Regularization Techniques

• Dropout: Randomly deactivates a fraction of neurons at each training step, so the network cannot rely too heavily on any single neuron. This helps reduce overfitting.

• Early Stopping: Stops training when performance on validation data stops improving.
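Both regularization techniques are simple enough to sketch by hand. The code below is an illustrative assumption, not a framework API: `dropout` implements the common "inverted dropout" variant (survivors are scaled up during training so nothing changes at inference time), and `EarlyStopping` is a hypothetical helper whose `patience` parameter is the number of epochs without improvement to tolerate. The validation losses in the example are made up.

```python
import random


def dropout(activations, rate, training, rng=random):
    """Inverted dropout: zero each activation with probability `rate` and scale
    the survivors by 1 / (1 - rate) so the expected value stays the same.
    At inference time (training=False) the input passes through unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as an improvement
        self.best = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses: improvement stalls after the third epoch.
stopper = EarlyStopping(patience=3)
for epoch, val_loss in enumerate([0.9, 0.7, 0.6, 0.62, 0.61, 0.65]):
    if stopper.should_stop(val_loss):
        print(f"stopping at epoch {epoch}, best validation loss {stopper.best}")
        break
```

In a real setup you would also keep a copy of the weights from the best epoch and restore them after stopping.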

Everyday Applications of Artificial Neural Networks

1. Voice Assistants

• Examples: Siri, Alexa, Google Assistant.

• Function: Use ANNs for speech recognition and natural language processing.

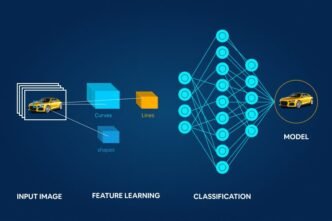

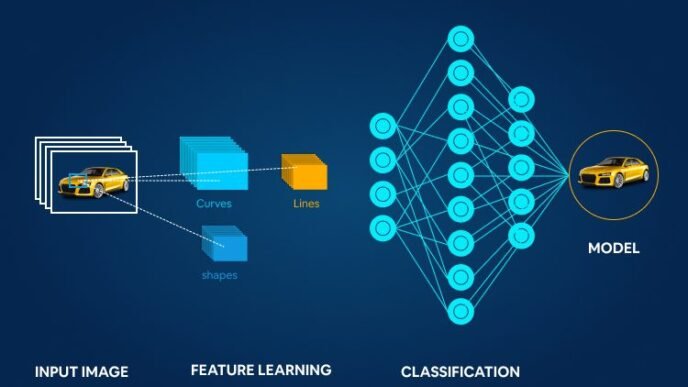

2. Image Recognition

• Examples: Facial recognition in smartphones, tagging friends on social media.

• Function: Convolutional Neural Networks (a type of ANN) analyze images.

3. Recommendation Systems

• Examples: Movie suggestions on Netflix, product recommendations on Amazon.

• Function: Analyze user behavior to predict preferences.

4. Healthcare

• Examples: Disease detection, personalized treatment plans.

• Function: ANNs analyze medical images and patient data.

5. Finance

• Examples: Fraud detection, stock market prediction.

• Function: Identify patterns and anomalies in financial data.
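The image-recognition example relies on Convolutional Neural Networks, whose core operation is sliding a small grid of weights (a kernel) across an image and taking a weighted sum at each position. Here is a minimal plain-Python sketch of that operation. Like most deep learning libraries, it technically computes cross-correlation (the kernel is not flipped). The kernel below is hand-picked to respond to a dark-to-bright vertical edge; in a real CNN the kernel weights are learned during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation) as used in CNN layers:
    slide the kernel over the image and take a weighted sum at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    if out_h <= 0 or out_w <= 0:
        raise ValueError("kernel is larger than the image")
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hand-picked (not learned) kernel that fires on dark-to-bright vertical edges.
EDGE_KERNEL = [[-1, 1],
               [-1, 1]]

# A tiny 4x4 "image": dark left half (0), bright right half (1).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]

for row in conv2d(image, EDGE_KERNEL):
    print(row)  # the middle column lights up where the edge is
```

A CNN stacks many such learned kernels, so early layers detect simple features like edges and later layers combine them into more complex shapes.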

The Future of Artificial Neural Networks

Advancements on the Horizon

• Deep Learning: Building deeper networks for more complex tasks.

• Reinforcement Learning: Networks that learn from interactions with the environment.

• Neuromorphic Computing: Hardware that mimics neural network architectures for efficiency.

Potential Impact

• Automation: Increased efficiency in industries like manufacturing and logistics.

• Personalization: Enhanced user experiences across services.

• Healthcare Innovations: Improved diagnostics and treatment options.

How to Learn Artificial Neural Networks Efficiently

1. Grasp the Fundamentals

• Mathematics: Basics of linear algebra, calculus, and statistics.

• Programming: Proficiency in languages like Python.

2. Start with High-Level Frameworks

• TensorFlow: An open-source library developed by Google.

• PyTorch: An open-source library originally developed by Facebook (now Meta).

3. Hands-On Practice

• Build Simple Models: Start with basic networks to solve simple problems.

• Participate in Competitions: Platforms like Kaggle offer real-world datasets.

4. Online Courses and Tutorials

• Coursera: Courses like Andrew Ng’s “Machine Learning.”

• edX: Offers courses from top universities.

• YouTube Channels: Free tutorials and explanations.

Learning Resources for Further Exploration

Books

• “Neural Networks and Deep Learning” by Michael Nielsen

• “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

Online Platforms

• Coursera: Machine Learning Specializations

• Udemy: Various courses on neural networks and AI.

• Kaggle: Kaggle Learn offers micro-courses.

Communities and Forums

• Stack Overflow: For programming questions.

• Reddit: Subreddits like r/MachineLearning and r/learnmachinelearning.

• GitHub: Explore repositories and contribute to projects.

Conclusion

Artificial Neural Networks are at the heart of the AI revolution, transforming the way we interact with technology. From personal assistants to medical diagnostics, their applications are vast and growing. By understanding the basics and engaging with the wealth of resources available, anyone can start their journey into the fascinating world of ANNs.

Embarking on learning about Artificial Neural Networks not only equips you with cutting-edge skills but also places you at the forefront of technological innovation. The future is neural, and it starts with you.